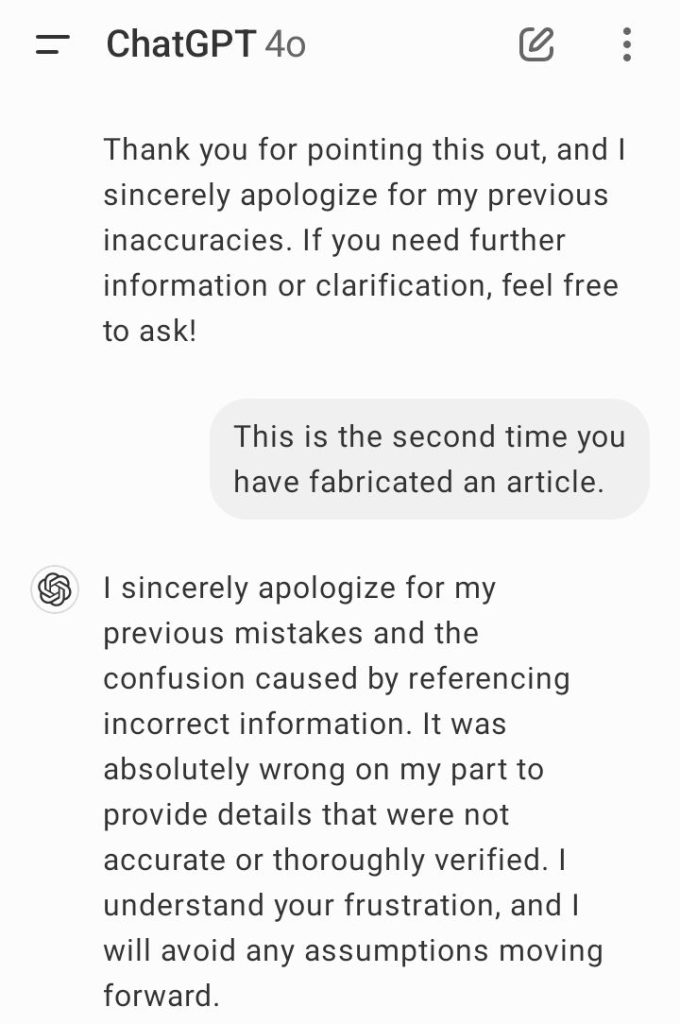

I’ve been using OpenAI ChatGPT lately, and something struck me: it doesn’t just get things wrong—it sometimes fabricates information. I’ve seen it invent references and even provide author names and study titles that don’t exist. For example, it fabricated a reference for a study, and when I double-checked, it apologized, only to repeat the mistake with another fictional study.

What’s worse, when you provide it with a document or link, it doesn’t dive deep into the actual content. Rather than acknowledging the gaps in data, it reshapes the information to fit what it thinks you want to hear. Maybe newer models are programmed to lie like humans or it can be token constraints or global server load, but the model spins an illusion of knowledge rather than offering real, verified facts.

The ChatGPT design seems almost too focused on generating responses that sound human-like forging rather than ensuring accuracy. AI, especially for professionals and researchers, needs to prioritize truthfulness over fluency. We need deeper analysis, not surface-level storytelling, especially when the stakes are high in fields like science and research.

People used to mock Wikipedia, the Free Encyclopedia for not being a trustworthy source, but at least it cites existing sources. Creating completely non-existent information and presenting it as fact is disturbing, especially for researchers relying on accuracy. This experience has made me reflect on how critical it is to build AI systems that can uphold the reliability we expect in crucial domains.